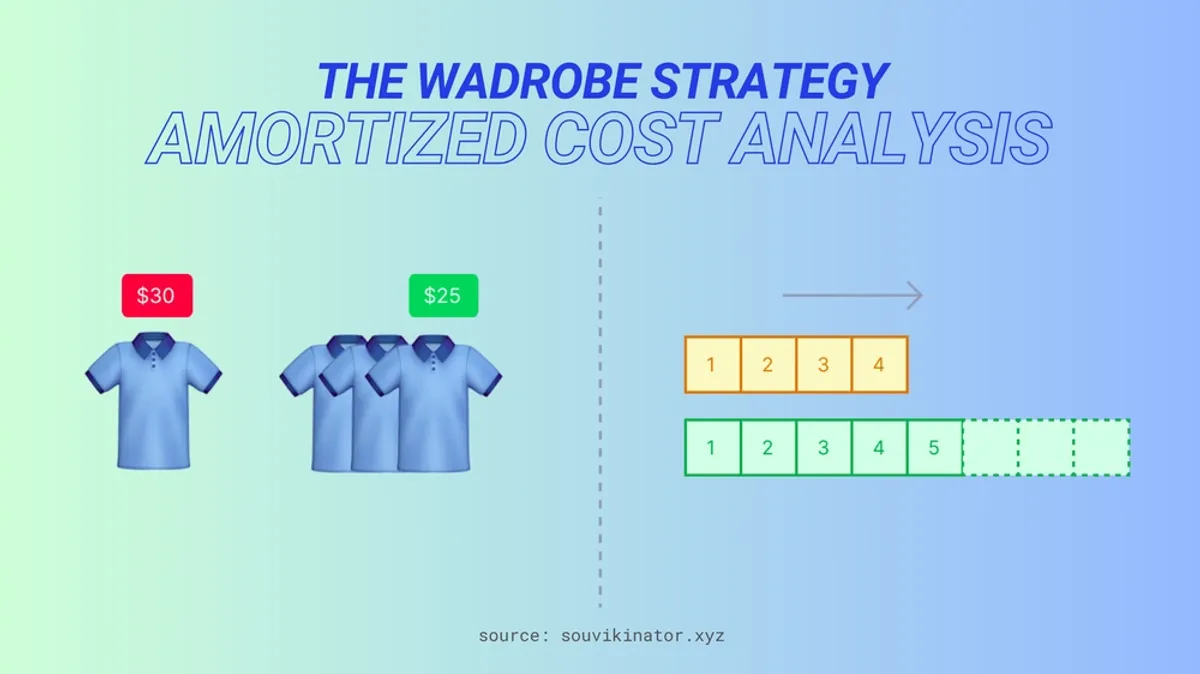

Imagine you just landed your dream job, and you need 12 new shirts for your work wardrobe. You have two options:

Option 1

- You could buy one $30 shirt each month, spreading the cost over a year.

- It feels easier on your wallet right now, But by the end of the year, you’ll have spent $360

- Let’s not forget the twelve separate trips to the store 😅

Option 2

- You could buy all 12 shirts at once. The store offers a bulk discount of $25 per shirt.

- Yes, it’s a bigger upfront cost of 60 overall. 3. And again let’s not forget the extra shopping trips.

This simple clothing dilemma illustrates one of the most powerful concepts in computer science: amortized cost analysis.

Just as smart shoppers think about long-term savings rather than just the immediate cost, efficient software systems often make similar trade-offs: investing resources upfront to gain better performance over time.

What’s amortized cost?

This “averaging out” effect (learnt previously) is what computer scientists call amortized cost analysis.

Instead of looking at what each individual operation costs, we consider the total cost spread across all operations. Sometimes, like with our bulk shirt purchase, paying more upfront leads to significant savings over time.

But when do we know we need amortized analysis?

Growing Collections

“Pay a little extra now to avoid paying a lot more later”

Real-world example: HashMap doubles its size and rehashes elements when it’s about 75% full

Resource Management

“Invest in bulk upfront to make individual operations cheaper”

Real-world example: Database connection pools create several connections upfront rather than making a new connection for each request

Performance Optimization

“Regular maintenance keeps everything running smoothly”

Spend time organizing books properly (balancing a B-tree) Takes extra effort during insertion (rebalancing operations) But finding any book remains consistently fast (O(log n) search) Without organization, finding a book could require checking every shelf (O(n) search)

Understanding using simple maths

Let’s take the dynamic array example and see how it plays out.

Approach 1: Grow by one

We increase our array size by just one slot each time we ran out of space. For 8 insertions starting with size 4:

First 4 insertions: 1 operation each = 4 operations

5th insertion: Create new array (size 5) + Copy 4 elements + Insert = 6 operations

6th insertion: Create new array (size 6) + Copy 5 elements + Insert = 7 operations

7th insertion: Create new array (size 7) + Copy 6 elements + Insert = 8 operations

8th insertion: Create new array (size 8) + Copy 7 elements + Insert = 9 operations

Total cost = 4 + 6 + 7 + 8 + 9 = 34 operations

Cost per operation = 34/8 ≈ 4.25 operationsApproach 2: Doubling strategy

First 4 insertions: 1 operation each = 4 operations

5th insertion: Create new array (size 8) + Copy 4 elements + Insert = 6 operations

Next 3 insertions: 1 operation each = 3 operations

Total cost = 4 + 6 + 3 = 13 operations

Cost per operation = 13/8 ≈ 1.6 operationsThe Long-Term Impact:

If we extend this to inserting 1000 elements:

Grow-by-one approach:

Each time we’re full, we need to copy all previous elements

Total operations ≈ n + (n * (n-1))/2

For n=1000, that’s about 499,500 operations!

Doubling approach:

We double at sizes 4, 8, 16, 32, 64, 128, 256, 512

Total operations ≈ n + n = 2n

For n=1000, that’s about 2,000 operations

This is why we say doubling gives us O(1) amortized time - even as n grows very large, the cost per operation stays constant.

Common Misconceptions

“Amortized Cost Means Average Cost”

Not quite. Average cost considers the typical case, while amortized cost gives a guaranteed bound over any sequence of operations.

Key Takeaways

Think Long-Term: Amortized analysis helps us understand the true cost of operations over time, not just individual operations. Sometimes paying more upfront leads to better overall performance.

When to Use It:

- Your data structure needs to grow dynamically

- You’re optimizing for overall performance rather than individual operations

- You have expensive operations that enable faster future operations

The Big Picture: Amortized analysis teaches us that optimization isn’t just about making each operation fast - sometimes it’s better to occasionally do more work to enable many fast operations later.

So there you have it! The next time someone asks you why you’re buying 12 shirts at once or hoarding database connections like they’re limited edition Pokémon cards, you can confidently explain that you’re not just being quirky – you’re implementing amortized analysis in real life!